In this article we look at the DenseNet architecture. It was introduced in 2016 and won the CVPR 2017 Best Paper Award. The paper is available at https://arxiv.org/abs/1608.06993, although I recommend the article on the Towards Data Science blog, which explains very well how it works.

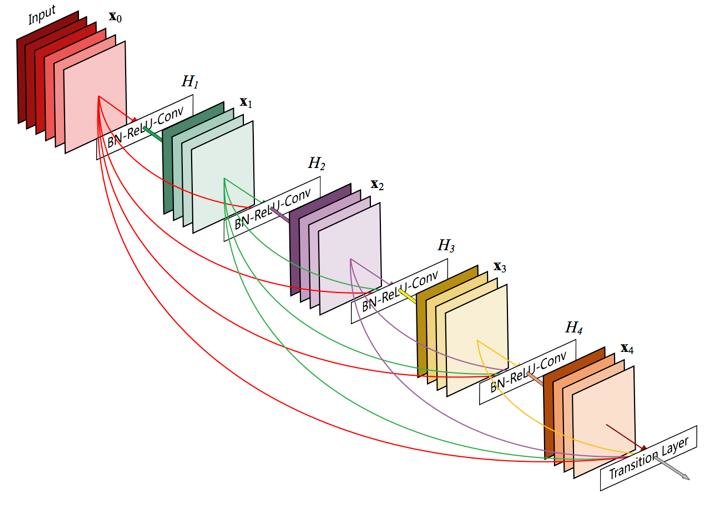

The idea, in short, is to concatenate the outputs of all preceding layers and feed them to each subsequent layer.

The image above shows a dense block of 5 layers with a growth rate of k = 4. Each layer takes all preceding feature maps as input.
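To make the concatenation concrete, here is a minimal sketch of how the number of channels grows inside a dense block. The function name `dense_block_channels` is my own, and the example values (64 input channels, 6 layers, growth rate 32) are taken from the first dense block of the network built later in this article, not from the figure.

```python
def dense_block_channels(input_channels, num_layers, growth_rate):
    """Return the channel count at the block input and after each layer.

    Every layer produces `growth_rate` new feature maps, which are
    concatenated onto everything the block has accumulated so far.
    """
    channels = [input_channels]
    for _ in range(num_layers):
        channels.append(channels[-1] + growth_rate)
    return channels


# 64 input channels, 6 layers, growth rate k = 32 (illustrative values).
print(dense_block_channels(64, 6, 32))
# [64, 96, 128, 160, 192, 224, 256]
```

The channel count grows linearly with depth, which is why the growth rate k can stay small.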

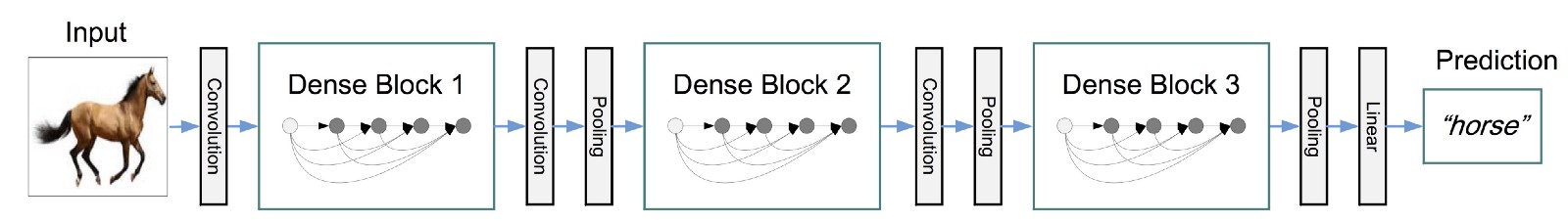

The image above shows a network with three dense blocks. The layers between two adjacent blocks are transition layers, which change the size of the feature maps through convolution and pooling.
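As a rough sketch of what a transition layer does to a feature map, the snippet below computes the output shape. It assumes a 1x1 convolution that keeps a fraction `reduction` of the channels, followed by 2x2 average pooling with stride 2. The helper name and the default of 0.5 are my assumptions, chosen to match the value used in the code later in this article.

```python
def transition_output_shape(height, width, channels, reduction=0.5):
    """Shape of a feature map after a transition layer (sketch)."""
    # The 1x1 convolution compresses the number of channels.
    out_channels = int(channels * reduction)
    # 2x2 pooling with stride 2 and no padding halves each spatial side.
    return height // 2, width // 2, out_channels


print(transition_output_shape(8, 8, 256))  # (4, 4, 128)
```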

Training the DenseNet architecture

Keras ships this architecture, but with the restriction that, by default, input images must be larger than 187 pixels, so we will define a smaller architecture.

    # Assumption: an older Keras 2.1.x-style API in which
    # keras.applications.densenet exposes dense_block, transition_block and
    # the *_WEIGHT_PATH constants, and imagenet_utils exposes the private
    # helper _obtain_input_shape.
    from keras import backend as K
    from keras.applications import densenet
    from keras.applications import imagenet_utils as imut
    # get_source_inputs is imported from keras.engine rather than looked up
    # on imagenet_utils.
    from keras.engine import get_source_inputs
    from keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                              Dropout, GlobalAveragePooling2D,
                              GlobalMaxPooling2D, Input, MaxPooling2D,
                              ZeroPadding2D)
    from keras.models import Model
    from keras.utils.data_utils import get_file


    def CustomDenseNet(blocks,
                       include_top=True,
                       input_tensor=None,
                       weights=None,
                       input_shape=None,
                       pooling=None,
                       classes=1000):
        # Determine proper input shape (min_size=32 allows small images).
        input_shape = imut._obtain_input_shape(input_shape,
                                               default_size=224,
                                               min_size=32,
                                               data_format=K.image_data_format(),
                                               require_flatten=include_top,
                                               weights=weights)

        if input_tensor is None:
            img_input = Input(shape=input_shape)
        else:
            if not K.is_keras_tensor(input_tensor):
                img_input = Input(tensor=input_tensor, shape=input_shape)
            else:
                img_input = input_tensor

        bn_axis = 3 if K.image_data_format() == 'channels_last' else 1

        # Stem: 7x7 convolution with stride 2, then 3x3 max pooling with stride 2.
        x = ZeroPadding2D(padding=((3, 3), (3, 3)))(img_input)
        x = Conv2D(64, 7, strides=2, use_bias=False, name='conv1/conv')(x)
        x = BatchNormalization(axis=bn_axis, epsilon=1.001e-5,
                               name='conv1/bn')(x)
        x = Activation('relu', name='conv1/relu')(x)
        x = ZeroPadding2D(padding=((1, 1), (1, 1)))(x)
        x = MaxPooling2D(3, strides=2, name='pool1')(x)

        # Four dense blocks separated by three transition blocks.
        x = densenet.dense_block(x, blocks[0], name='conv2')
        x = densenet.transition_block(x, 0.5, name='pool2')
        x = densenet.dense_block(x, blocks[1], name='conv3')
        x = densenet.transition_block(x, 0.5, name='pool3')
        x = densenet.dense_block(x, blocks[2], name='conv4')
        x = densenet.transition_block(x, 0.5, name='pool4')
        x = densenet.dense_block(x, blocks[3], name='conv5')

        x = BatchNormalization(axis=bn_axis, epsilon=1.001e-5,
                               name='bn')(x)

        if include_top:
            x = GlobalAveragePooling2D(name='avg_pool')(x)
            x = Dense(classes, activation='softmax', name='fc1000')(x)
        else:
            if pooling == 'avg':
                x = GlobalAveragePooling2D(name='avg_pool')(x)
            elif pooling == 'max':
                x = GlobalMaxPooling2D(name='max_pool')(x)

        # Ensure that the model takes into account
        # any potential predecessors of `input_tensor`.
        if input_tensor is not None:
            inputs = get_source_inputs(input_tensor)
        else:
            inputs = img_input

        # Create model.
        if blocks == [6, 12, 24, 16]:
            model = Model(inputs, x, name='densenet121')
        elif blocks == [6, 12, 32, 32]:
            model = Model(inputs, x, name='densenet169')
        elif blocks == [6, 12, 48, 32]:
            model = Model(inputs, x, name='densenet201')
        else:
            model = Model(inputs, x, name='densenet')

        # Load weights. ImageNet weights only exist for the three standard
        # block configurations; the constants live in the densenet module.
        if weights == 'imagenet':
            if include_top:
                if blocks == [6, 12, 24, 16]:
                    weights_path = get_file(
                        'densenet121_weights_tf_dim_ordering_tf_kernels.h5',
                        densenet.DENSENET121_WEIGHT_PATH,
                        cache_subdir='models',
                        file_hash='0962ca643bae20f9b6771cb844dca3b0')
                elif blocks == [6, 12, 32, 32]:
                    weights_path = get_file(
                        'densenet169_weights_tf_dim_ordering_tf_kernels.h5',
                        densenet.DENSENET169_WEIGHT_PATH,
                        cache_subdir='models',
                        file_hash='bcf9965cf5064a5f9eb6d7dc69386f43')
                elif blocks == [6, 12, 48, 32]:
                    weights_path = get_file(
                        'densenet201_weights_tf_dim_ordering_tf_kernels.h5',
                        densenet.DENSENET201_WEIGHT_PATH,
                        cache_subdir='models',
                        file_hash='7bb75edd58cb43163be7e0005fbe95ef')
            else:
                if blocks == [6, 12, 24, 16]:
                    weights_path = get_file(
                        'densenet121_weights_tf_dim_ordering_tf_kernels_notop.h5',
                        densenet.DENSENET121_WEIGHT_PATH_NO_TOP,
                        cache_subdir='models',
                        file_hash='4912a53fbd2a69346e7f2c0b5ec8c6d3')
                elif blocks == [6, 12, 32, 32]:
                    weights_path = get_file(
                        'densenet169_weights_tf_dim_ordering_tf_kernels_notop.h5',
                        densenet.DENSENET169_WEIGHT_PATH_NO_TOP,
                        cache_subdir='models',
                        file_hash='50662582284e4cf834ce40ab4dfa58c6')
                elif blocks == [6, 12, 48, 32]:
                    weights_path = get_file(
                        'densenet201_weights_tf_dim_ordering_tf_kernels_notop.h5',
                        densenet.DENSENET201_WEIGHT_PATH_NO_TOP,
                        cache_subdir='models',
                        file_hash='1c2de60ee40562448dbac34a0737e798')
            model.load_weights(weights_path)
        elif weights is not None:
            model.load_weights(weights)

        return model


    def create_densenet():
        # Headless DenseNet for 32x32 RGB images; `classes` has no effect
        # here because include_top=False.
        base_model = CustomDenseNet([6, 12, 16, 8], include_top=False,
                                    weights=None, input_tensor=None,
                                    input_shape=(32, 32, 3), pooling=None,
                                    classes=100)

        # Custom classification head with 100 output classes.
        x = base_model.output
        x = GlobalAveragePooling2D(name='avg_pool')(x)
        x = Dense(500)(x)
        x = Activation('relu')(x)
        x = Dropout(0.5)(x)
        predictions = Dense(100, activation='softmax')(x)

        model = Model(inputs=base_model.input, outputs=predictions)
        return model
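To see why the input size matters, it can help to trace the side length of the feature maps through the network. The sketch below is plain Python, not Keras. The stem settings are read off the code above. That each transition block ends in a 2x2 average pooling with stride 2 is my assumption about `densenet.transition_block`. The helper names are my own.

```python
def window_output_size(size, kernel, stride, padding=0):
    """Output length along one axis for a conv or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def densenet_spatial_sizes(input_size):
    """Side length after the input, the two stem stages and three transitions."""
    sizes = [input_size]
    # Stem: 7x7 convolution, stride 2, zero-padding 3.
    sizes.append(window_output_size(sizes[-1], kernel=7, stride=2, padding=3))
    # Stem: 3x3 max pooling, stride 2, zero-padding 1.
    sizes.append(window_output_size(sizes[-1], kernel=3, stride=2, padding=1))
    # Three transition blocks, each assumed to end in 2x2 pooling, stride 2.
    # Dense blocks keep the spatial size, so they are not listed.
    for _ in range(3):
        sizes.append(window_output_size(sizes[-1], kernel=2, stride=2))
    return sizes


print(densenet_spatial_sizes(32))   # [32, 16, 8, 4, 2, 1]
print(densenet_spatial_sizes(224))  # [224, 112, 56, 28, 14, 7]
```

Under these assumptions a 32x32 image is reduced to a single 1x1 position by the last block, so anything smaller would leave nothing to pool.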

We compile the model as usual:

    custom_dense_model = create_densenet()
    custom_dense_model.compile(loss='categorical_crossentropy', optimizer='sgd', metrics=['acc', 'mse'])
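As a sanity check on the compiled model, the parameter counts that Keras reports per layer can be reproduced by hand. This is a small sketch with helper names of my own, assuming convolutions without bias (`use_bias=False`, as in the stem) and four values per channel for batch normalization. The layer names in the comments are those that appear in the model summary.

```python
def conv2d_params(kernel_size, in_channels, out_channels, use_bias=False):
    """Number of weights in a square-kernel 2D convolution."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def batchnorm_params(channels):
    """gamma, beta, moving mean and moving variance: four values per channel."""
    return 4 * channels


print(conv2d_params(7, 3, 64))    # 9408  (conv1/conv)
print(batchnorm_params(64))       # 256   (conv1/bn)
print(conv2d_params(1, 64, 128))  # 8192  (conv2_block1_1_conv)
print(conv2d_params(3, 128, 32))  # 36864 (conv2_block1_2_conv)
```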

Once this is done, let's look at a summary of the model we created:

    custom_dense_model.summary()

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 32, 32, 3) 0

__________________________________________________________________________________________________

zero_padding2d_1 (ZeroPadding2D (None, 38, 38, 3) 0 input_1[0][0]

__________________________________________________________________________________________________

conv1/conv (Conv2D) (None, 16, 16, 64) 9408 zero_padding2d_1[0][0]

__________________________________________________________________________________________________

conv1/bn (BatchNormalization) (None, 16, 16, 64) 256 conv1/conv[0][0]

__________________________________________________________________________________________________

conv1/relu (Activation) (None, 16, 16, 64) 0 conv1/bn[0][0]

__________________________________________________________________________________________________

zero_padding2d_2 (ZeroPadding2D (None, 18, 18, 64) 0 conv1/relu[0][0]

__________________________________________________________________________________________________

pool1 (MaxPooling2D) (None, 8, 8, 64) 0 zero_padding2d_2[0][0]

__________________________________________________________________________________________________

conv2_block1_0_bn (BatchNormali (None, 8, 8, 64) 256 pool1[0][0]

__________________________________________________________________________________________________

conv2_block1_0_relu (Activation (None, 8, 8, 64) 0 conv2_block1_0_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_1_conv (Conv2D) (None, 8, 8, 128) 8192 conv2_block1_0_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_1_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_1_relu (Activation (None, 8, 8, 128) 0 conv2_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_2_conv (Conv2D) (None, 8, 8, 32) 36864 conv2_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_concat (Concatenat (None, 8, 8, 96) 0 pool1[0][0]

conv2_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_0_bn (BatchNormali (None, 8, 8, 96) 384 conv2_block1_concat[0][0]

__________________________________________________________________________________________________

conv2_block2_0_relu (Activation (None, 8, 8, 96) 0 conv2_block2_0_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_1_conv (Conv2D) (None, 8, 8, 128) 12288 conv2_block2_0_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_1_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_1_relu (Activation (None, 8, 8, 128) 0 conv2_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_2_conv (Conv2D) (None, 8, 8, 32) 36864 conv2_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_concat (Concatenat (None, 8, 8, 128) 0 conv2_block1_concat[0][0]

conv2_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_0_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block2_concat[0][0]

__________________________________________________________________________________________________

conv2_block3_0_relu (Activation (None, 8, 8, 128) 0 conv2_block3_0_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_1_conv (Conv2D) (None, 8, 8, 128) 16384 conv2_block3_0_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_1_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_1_relu (Activation (None, 8, 8, 128) 0 conv2_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_2_conv (Conv2D) (None, 8, 8, 32) 36864 conv2_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_concat (Concatenat (None, 8, 8, 160) 0 conv2_block2_concat[0][0]

conv2_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block4_0_bn (BatchNormali (None, 8, 8, 160) 640 conv2_block3_concat[0][0]

__________________________________________________________________________________________________

conv2_block4_0_relu (Activation (None, 8, 8, 160) 0 conv2_block4_0_bn[0][0]

__________________________________________________________________________________________________

conv2_block4_1_conv (Conv2D) (None, 8, 8, 128) 20480 conv2_block4_0_relu[0][0]

__________________________________________________________________________________________________

conv2_block4_1_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block4_1_relu (Activation (None, 8, 8, 128) 0 conv2_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block4_2_conv (Conv2D) (None, 8, 8, 32) 36864 conv2_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block4_concat (Concatenat (None, 8, 8, 192) 0 conv2_block3_concat[0][0]

conv2_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block5_0_bn (BatchNormali (None, 8, 8, 192) 768 conv2_block4_concat[0][0]

__________________________________________________________________________________________________

conv2_block5_0_relu (Activation (None, 8, 8, 192) 0 conv2_block5_0_bn[0][0]

__________________________________________________________________________________________________

conv2_block5_1_conv (Conv2D) (None, 8, 8, 128) 24576 conv2_block5_0_relu[0][0]

__________________________________________________________________________________________________

conv2_block5_1_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block5_1_relu (Activation (None, 8, 8, 128) 0 conv2_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block5_2_conv (Conv2D) (None, 8, 8, 32) 36864 conv2_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block5_concat (Concatenat (None, 8, 8, 224) 0 conv2_block4_concat[0][0]

conv2_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block6_0_bn (BatchNormali (None, 8, 8, 224) 896 conv2_block5_concat[0][0]

__________________________________________________________________________________________________

conv2_block6_0_relu (Activation (None, 8, 8, 224) 0 conv2_block6_0_bn[0][0]

__________________________________________________________________________________________________

conv2_block6_1_conv (Conv2D) (None, 8, 8, 128) 28672 conv2_block6_0_relu[0][0]

__________________________________________________________________________________________________

conv2_block6_1_bn (BatchNormali (None, 8, 8, 128) 512 conv2_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block6_1_relu (Activation (None, 8, 8, 128) 0 conv2_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block6_2_conv (Conv2D) (None, 8, 8, 32) 36864 conv2_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block6_concat (Concatenat (None, 8, 8, 256) 0 conv2_block5_concat[0][0]

conv2_block6_2_conv[0][0]

__________________________________________________________________________________________________

pool2_bn (BatchNormalization) (None, 8, 8, 256) 1024 conv2_block6_concat[0][0]

__________________________________________________________________________________________________

pool2_relu (Activation) (None, 8, 8, 256) 0 pool2_bn[0][0]

__________________________________________________________________________________________________

pool2_conv (Conv2D) (None, 8, 8, 128) 32768 pool2_relu[0][0]

__________________________________________________________________________________________________

pool2_pool (AveragePooling2D) (None, 4, 4, 128) 0 pool2_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_0_bn (BatchNormali (None, 4, 4, 128) 512 pool2_pool[0][0]

__________________________________________________________________________________________________

conv3_block1_0_relu (Activation (None, 4, 4, 128) 0 conv3_block1_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_1_conv (Conv2D) (None, 4, 4, 128) 16384 conv3_block1_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_1_relu (Activation (None, 4, 4, 128) 0 conv3_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_concat (Concatenat (None, 4, 4, 160) 0 pool2_pool[0][0]

conv3_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_0_bn (BatchNormali (None, 4, 4, 160) 640 conv3_block1_concat[0][0]

__________________________________________________________________________________________________

conv3_block2_0_relu (Activation (None, 4, 4, 160) 0 conv3_block2_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_1_conv (Conv2D) (None, 4, 4, 128) 20480 conv3_block2_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_1_relu (Activation (None, 4, 4, 128) 0 conv3_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_concat (Concatenat (None, 4, 4, 192) 0 conv3_block1_concat[0][0]

conv3_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_0_bn (BatchNormali (None, 4, 4, 192) 768 conv3_block2_concat[0][0]

__________________________________________________________________________________________________

conv3_block3_0_relu (Activation (None, 4, 4, 192) 0 conv3_block3_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_1_conv (Conv2D) (None, 4, 4, 128) 24576 conv3_block3_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_1_relu (Activation (None, 4, 4, 128) 0 conv3_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_concat (Concatenat (None, 4, 4, 224) 0 conv3_block2_concat[0][0]

conv3_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_0_bn (BatchNormali (None, 4, 4, 224) 896 conv3_block3_concat[0][0]

__________________________________________________________________________________________________

conv3_block4_0_relu (Activation (None, 4, 4, 224) 0 conv3_block4_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_1_conv (Conv2D) (None, 4, 4, 128) 28672 conv3_block4_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_1_relu (Activation (None, 4, 4, 128) 0 conv3_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_concat (Concatenat (None, 4, 4, 256) 0 conv3_block3_concat[0][0]

conv3_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block5_0_bn (BatchNormali (None, 4, 4, 256) 1024 conv3_block4_concat[0][0]

__________________________________________________________________________________________________

conv3_block5_0_relu (Activation (None, 4, 4, 256) 0 conv3_block5_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block5_1_conv (Conv2D) (None, 4, 4, 128) 32768 conv3_block5_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block5_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block5_1_relu (Activation (None, 4, 4, 128) 0 conv3_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block5_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block5_concat (Concatenat (None, 4, 4, 288) 0 conv3_block4_concat[0][0]

conv3_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block6_0_bn (BatchNormali (None, 4, 4, 288) 1152 conv3_block5_concat[0][0]

__________________________________________________________________________________________________

conv3_block6_0_relu (Activation (None, 4, 4, 288) 0 conv3_block6_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block6_1_conv (Conv2D) (None, 4, 4, 128) 36864 conv3_block6_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block6_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block6_1_relu (Activation (None, 4, 4, 128) 0 conv3_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block6_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block6_concat (Concatenat (None, 4, 4, 320) 0 conv3_block5_concat[0][0]

conv3_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block7_0_bn (BatchNormali (None, 4, 4, 320) 1280 conv3_block6_concat[0][0]

__________________________________________________________________________________________________

conv3_block7_0_relu (Activation (None, 4, 4, 320) 0 conv3_block7_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block7_1_conv (Conv2D) (None, 4, 4, 128) 40960 conv3_block7_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block7_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block7_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block7_1_relu (Activation (None, 4, 4, 128) 0 conv3_block7_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block7_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block7_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block7_concat (Concatenat (None, 4, 4, 352) 0 conv3_block6_concat[0][0]

conv3_block7_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block8_0_bn (BatchNormali (None, 4, 4, 352) 1408 conv3_block7_concat[0][0]

__________________________________________________________________________________________________

conv3_block8_0_relu (Activation (None, 4, 4, 352) 0 conv3_block8_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block8_1_conv (Conv2D) (None, 4, 4, 128) 45056 conv3_block8_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block8_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block8_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block8_1_relu (Activation (None, 4, 4, 128) 0 conv3_block8_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block8_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block8_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block8_concat (Concatenat (None, 4, 4, 384) 0 conv3_block7_concat[0][0]

conv3_block8_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block9_0_bn (BatchNormali (None, 4, 4, 384) 1536 conv3_block8_concat[0][0]

__________________________________________________________________________________________________

conv3_block9_0_relu (Activation (None, 4, 4, 384) 0 conv3_block9_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block9_1_conv (Conv2D) (None, 4, 4, 128) 49152 conv3_block9_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block9_1_bn (BatchNormali (None, 4, 4, 128) 512 conv3_block9_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block9_1_relu (Activation (None, 4, 4, 128) 0 conv3_block9_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block9_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block9_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block9_concat (Concatenat (None, 4, 4, 416) 0 conv3_block8_concat[0][0]

conv3_block9_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block10_0_bn (BatchNormal (None, 4, 4, 416) 1664 conv3_block9_concat[0][0]

__________________________________________________________________________________________________

conv3_block10_0_relu (Activatio (None, 4, 4, 416) 0 conv3_block10_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block10_1_conv (Conv2D) (None, 4, 4, 128) 53248 conv3_block10_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block10_1_bn (BatchNormal (None, 4, 4, 128) 512 conv3_block10_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block10_1_relu (Activatio (None, 4, 4, 128) 0 conv3_block10_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block10_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block10_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block10_concat (Concatena (None, 4, 4, 448) 0 conv3_block9_concat[0][0]

conv3_block10_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block11_0_bn (BatchNormal (None, 4, 4, 448) 1792 conv3_block10_concat[0][0]

__________________________________________________________________________________________________

conv3_block11_0_relu (Activatio (None, 4, 4, 448) 0 conv3_block11_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block11_1_conv (Conv2D) (None, 4, 4, 128) 57344 conv3_block11_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block11_1_bn (BatchNormal (None, 4, 4, 128) 512 conv3_block11_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block11_1_relu (Activatio (None, 4, 4, 128) 0 conv3_block11_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block11_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block11_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block11_concat (Concatena (None, 4, 4, 480) 0 conv3_block10_concat[0][0]

conv3_block11_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block12_0_bn (BatchNormal (None, 4, 4, 480) 1920 conv3_block11_concat[0][0]

__________________________________________________________________________________________________

conv3_block12_0_relu (Activatio (None, 4, 4, 480) 0 conv3_block12_0_bn[0][0]

__________________________________________________________________________________________________

conv3_block12_1_conv (Conv2D) (None, 4, 4, 128) 61440 conv3_block12_0_relu[0][0]

__________________________________________________________________________________________________

conv3_block12_1_bn (BatchNormal (None, 4, 4, 128) 512 conv3_block12_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block12_1_relu (Activatio (None, 4, 4, 128) 0 conv3_block12_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block12_2_conv (Conv2D) (None, 4, 4, 32) 36864 conv3_block12_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block12_concat (Concatena (None, 4, 4, 512) 0 conv3_block11_concat[0][0]

conv3_block12_2_conv[0][0]

__________________________________________________________________________________________________

pool3_bn (BatchNormalization) (None, 4, 4, 512) 2048 conv3_block12_concat[0][0]

__________________________________________________________________________________________________

pool3_relu (Activation) (None, 4, 4, 512) 0 pool3_bn[0][0]

__________________________________________________________________________________________________

pool3_conv (Conv2D) (None, 4, 4, 256) 131072 pool3_relu[0][0]

__________________________________________________________________________________________________

pool3_pool (AveragePooling2D) (None, 2, 2, 256) 0 pool3_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_0_bn (BatchNormali (None, 2, 2, 256) 1024 pool3_pool[0][0]

__________________________________________________________________________________________________

conv4_block1_0_relu (Activation (None, 2, 2, 256) 0 conv4_block1_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_1_conv (Conv2D) (None, 2, 2, 128) 32768 conv4_block1_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_1_relu (Activation (None, 2, 2, 128) 0 conv4_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_concat (Concatenat (None, 2, 2, 288) 0 pool3_pool[0][0]

conv4_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_0_bn (BatchNormali (None, 2, 2, 288) 1152 conv4_block1_concat[0][0]

__________________________________________________________________________________________________

conv4_block2_0_relu (Activation (None, 2, 2, 288) 0 conv4_block2_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_1_conv (Conv2D) (None, 2, 2, 128) 36864 conv4_block2_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_1_relu (Activation (None, 2, 2, 128) 0 conv4_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_concat (Concatenat (None, 2, 2, 320) 0 conv4_block1_concat[0][0]

conv4_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_0_bn (BatchNormali (None, 2, 2, 320) 1280 conv4_block2_concat[0][0]

__________________________________________________________________________________________________

conv4_block3_0_relu (Activation (None, 2, 2, 320) 0 conv4_block3_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_1_conv (Conv2D) (None, 2, 2, 128) 40960 conv4_block3_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_1_relu (Activation (None, 2, 2, 128) 0 conv4_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_concat (Concatenat (None, 2, 2, 352) 0 conv4_block2_concat[0][0]

conv4_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_0_bn (BatchNormali (None, 2, 2, 352) 1408 conv4_block3_concat[0][0]

__________________________________________________________________________________________________

conv4_block4_0_relu (Activation (None, 2, 2, 352) 0 conv4_block4_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_1_conv (Conv2D) (None, 2, 2, 128) 45056 conv4_block4_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_1_relu (Activation (None, 2, 2, 128) 0 conv4_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_concat (Concatenat (None, 2, 2, 384) 0 conv4_block3_concat[0][0]

conv4_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_0_bn (BatchNormali (None, 2, 2, 384) 1536 conv4_block4_concat[0][0]

__________________________________________________________________________________________________

conv4_block5_0_relu (Activation (None, 2, 2, 384) 0 conv4_block5_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_1_conv (Conv2D) (None, 2, 2, 128) 49152 conv4_block5_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_1_relu (Activation (None, 2, 2, 128) 0 conv4_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_concat (Concatenat (None, 2, 2, 416) 0 conv4_block4_concat[0][0]

conv4_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_0_bn (BatchNormali (None, 2, 2, 416) 1664 conv4_block5_concat[0][0]

__________________________________________________________________________________________________

conv4_block6_0_relu (Activation (None, 2, 2, 416) 0 conv4_block6_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_1_conv (Conv2D) (None, 2, 2, 128) 53248 conv4_block6_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_1_relu (Activation (None, 2, 2, 128) 0 conv4_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_concat (Concatenat (None, 2, 2, 448) 0 conv4_block5_concat[0][0]

conv4_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block7_0_bn (BatchNormali (None, 2, 2, 448) 1792 conv4_block6_concat[0][0]

__________________________________________________________________________________________________

conv4_block7_0_relu (Activation (None, 2, 2, 448) 0 conv4_block7_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block7_1_conv (Conv2D) (None, 2, 2, 128) 57344 conv4_block7_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block7_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block7_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block7_1_relu (Activation (None, 2, 2, 128) 0 conv4_block7_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block7_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block7_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block7_concat (Concatenat (None, 2, 2, 480) 0 conv4_block6_concat[0][0]

conv4_block7_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block8_0_bn (BatchNormali (None, 2, 2, 480) 1920 conv4_block7_concat[0][0]

__________________________________________________________________________________________________

conv4_block8_0_relu (Activation (None, 2, 2, 480) 0 conv4_block8_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block8_1_conv (Conv2D) (None, 2, 2, 128) 61440 conv4_block8_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block8_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block8_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block8_1_relu (Activation (None, 2, 2, 128) 0 conv4_block8_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block8_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block8_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block8_concat (Concatenat (None, 2, 2, 512) 0 conv4_block7_concat[0][0]

conv4_block8_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block9_0_bn (BatchNormali (None, 2, 2, 512) 2048 conv4_block8_concat[0][0]

__________________________________________________________________________________________________

conv4_block9_0_relu (Activation (None, 2, 2, 512) 0 conv4_block9_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block9_1_conv (Conv2D) (None, 2, 2, 128) 65536 conv4_block9_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block9_1_bn (BatchNormali (None, 2, 2, 128) 512 conv4_block9_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block9_1_relu (Activation (None, 2, 2, 128) 0 conv4_block9_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block9_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block9_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block9_concat (Concatenat (None, 2, 2, 544) 0 conv4_block8_concat[0][0]

conv4_block9_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block10_0_bn (BatchNormal (None, 2, 2, 544) 2176 conv4_block9_concat[0][0]

__________________________________________________________________________________________________

conv4_block10_0_relu (Activatio (None, 2, 2, 544) 0 conv4_block10_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block10_1_conv (Conv2D) (None, 2, 2, 128) 69632 conv4_block10_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block10_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block10_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block10_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block10_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block10_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block10_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block10_concat (Concatena (None, 2, 2, 576) 0 conv4_block9_concat[0][0]

conv4_block10_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block11_0_bn (BatchNormal (None, 2, 2, 576) 2304 conv4_block10_concat[0][0]

__________________________________________________________________________________________________

conv4_block11_0_relu (Activatio (None, 2, 2, 576) 0 conv4_block11_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block11_1_conv (Conv2D) (None, 2, 2, 128) 73728 conv4_block11_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block11_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block11_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block11_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block11_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block11_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block11_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block11_concat (Concatena (None, 2, 2, 608) 0 conv4_block10_concat[0][0]

conv4_block11_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block12_0_bn (BatchNormal (None, 2, 2, 608) 2432 conv4_block11_concat[0][0]

__________________________________________________________________________________________________

conv4_block12_0_relu (Activatio (None, 2, 2, 608) 0 conv4_block12_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block12_1_conv (Conv2D) (None, 2, 2, 128) 77824 conv4_block12_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block12_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block12_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block12_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block12_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block12_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block12_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block12_concat (Concatena (None, 2, 2, 640) 0 conv4_block11_concat[0][0]

conv4_block12_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block13_0_bn (BatchNormal (None, 2, 2, 640) 2560 conv4_block12_concat[0][0]

__________________________________________________________________________________________________

conv4_block13_0_relu (Activatio (None, 2, 2, 640) 0 conv4_block13_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block13_1_conv (Conv2D) (None, 2, 2, 128) 81920 conv4_block13_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block13_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block13_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block13_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block13_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block13_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block13_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block13_concat (Concatena (None, 2, 2, 672) 0 conv4_block12_concat[0][0]

conv4_block13_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block14_0_bn (BatchNormal (None, 2, 2, 672) 2688 conv4_block13_concat[0][0]

__________________________________________________________________________________________________

conv4_block14_0_relu (Activatio (None, 2, 2, 672) 0 conv4_block14_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block14_1_conv (Conv2D) (None, 2, 2, 128) 86016 conv4_block14_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block14_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block14_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block14_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block14_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block14_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block14_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block14_concat (Concatena (None, 2, 2, 704) 0 conv4_block13_concat[0][0]

conv4_block14_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block15_0_bn (BatchNormal (None, 2, 2, 704) 2816 conv4_block14_concat[0][0]

__________________________________________________________________________________________________

conv4_block15_0_relu (Activatio (None, 2, 2, 704) 0 conv4_block15_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block15_1_conv (Conv2D) (None, 2, 2, 128) 90112 conv4_block15_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block15_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block15_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block15_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block15_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block15_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block15_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block15_concat (Concatena (None, 2, 2, 736) 0 conv4_block14_concat[0][0]

conv4_block15_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block16_0_bn (BatchNormal (None, 2, 2, 736) 2944 conv4_block15_concat[0][0]

__________________________________________________________________________________________________

conv4_block16_0_relu (Activatio (None, 2, 2, 736) 0 conv4_block16_0_bn[0][0]

__________________________________________________________________________________________________

conv4_block16_1_conv (Conv2D) (None, 2, 2, 128) 94208 conv4_block16_0_relu[0][0]

__________________________________________________________________________________________________

conv4_block16_1_bn (BatchNormal (None, 2, 2, 128) 512 conv4_block16_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block16_1_relu (Activatio (None, 2, 2, 128) 0 conv4_block16_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block16_2_conv (Conv2D) (None, 2, 2, 32) 36864 conv4_block16_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block16_concat (Concatena (None, 2, 2, 768) 0 conv4_block15_concat[0][0]

conv4_block16_2_conv[0][0]

__________________________________________________________________________________________________

pool4_bn (BatchNormalization) (None, 2, 2, 768) 3072 conv4_block16_concat[0][0]

__________________________________________________________________________________________________

pool4_relu (Activation) (None, 2, 2, 768) 0 pool4_bn[0][0]

__________________________________________________________________________________________________

pool4_conv (Conv2D) (None, 2, 2, 384) 294912 pool4_relu[0][0]

__________________________________________________________________________________________________

pool4_pool (AveragePooling2D) (None, 1, 1, 384) 0 pool4_conv[0][0]

__________________________________________________________________________________________________

conv5_block1_0_bn (BatchNormali (None, 1, 1, 384) 1536 pool4_pool[0][0]

__________________________________________________________________________________________________

conv5_block1_0_relu (Activation (None, 1, 1, 384) 0 conv5_block1_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block1_1_conv (Conv2D) (None, 1, 1, 128) 49152 conv5_block1_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block1_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block1_1_relu (Activation (None, 1, 1, 128) 0 conv5_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block1_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block1_concat (Concatenat (None, 1, 1, 416) 0 pool4_pool[0][0]

conv5_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block2_0_bn (BatchNormali (None, 1, 1, 416) 1664 conv5_block1_concat[0][0]

__________________________________________________________________________________________________

conv5_block2_0_relu (Activation (None, 1, 1, 416) 0 conv5_block2_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block2_1_conv (Conv2D) (None, 1, 1, 128) 53248 conv5_block2_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block2_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block2_1_relu (Activation (None, 1, 1, 128) 0 conv5_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block2_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block2_concat (Concatenat (None, 1, 1, 448) 0 conv5_block1_concat[0][0]

conv5_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block3_0_bn (BatchNormali (None, 1, 1, 448) 1792 conv5_block2_concat[0][0]

__________________________________________________________________________________________________

conv5_block3_0_relu (Activation (None, 1, 1, 448) 0 conv5_block3_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block3_1_conv (Conv2D) (None, 1, 1, 128) 57344 conv5_block3_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block3_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block3_1_relu (Activation (None, 1, 1, 128) 0 conv5_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block3_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block3_concat (Concatenat (None, 1, 1, 480) 0 conv5_block2_concat[0][0]

conv5_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block4_0_bn (BatchNormali (None, 1, 1, 480) 1920 conv5_block3_concat[0][0]

__________________________________________________________________________________________________

conv5_block4_0_relu (Activation (None, 1, 1, 480) 0 conv5_block4_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block4_1_conv (Conv2D) (None, 1, 1, 128) 61440 conv5_block4_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block4_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block4_1_relu (Activation (None, 1, 1, 128) 0 conv5_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block4_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block4_concat (Concatenat (None, 1, 1, 512) 0 conv5_block3_concat[0][0]

conv5_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block5_0_bn (BatchNormali (None, 1, 1, 512) 2048 conv5_block4_concat[0][0]

__________________________________________________________________________________________________

conv5_block5_0_relu (Activation (None, 1, 1, 512) 0 conv5_block5_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block5_1_conv (Conv2D) (None, 1, 1, 128) 65536 conv5_block5_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block5_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block5_1_relu (Activation (None, 1, 1, 128) 0 conv5_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block5_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block5_concat (Concatenat (None, 1, 1, 544) 0 conv5_block4_concat[0][0]

conv5_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block6_0_bn (BatchNormali (None, 1, 1, 544) 2176 conv5_block5_concat[0][0]

__________________________________________________________________________________________________

conv5_block6_0_relu (Activation (None, 1, 1, 544) 0 conv5_block6_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block6_1_conv (Conv2D) (None, 1, 1, 128) 69632 conv5_block6_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block6_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block6_1_relu (Activation (None, 1, 1, 128) 0 conv5_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block6_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block6_concat (Concatenat (None, 1, 1, 576) 0 conv5_block5_concat[0][0]

conv5_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block7_0_bn (BatchNormali (None, 1, 1, 576) 2304 conv5_block6_concat[0][0]

__________________________________________________________________________________________________

conv5_block7_0_relu (Activation (None, 1, 1, 576) 0 conv5_block7_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block7_1_conv (Conv2D) (None, 1, 1, 128) 73728 conv5_block7_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block7_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block7_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block7_1_relu (Activation (None, 1, 1, 128) 0 conv5_block7_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block7_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block7_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block7_concat (Concatenat (None, 1, 1, 608) 0 conv5_block6_concat[0][0]

conv5_block7_2_conv[0][0]

__________________________________________________________________________________________________

conv5_block8_0_bn (BatchNormali (None, 1, 1, 608) 2432 conv5_block7_concat[0][0]

__________________________________________________________________________________________________

conv5_block8_0_relu (Activation (None, 1, 1, 608) 0 conv5_block8_0_bn[0][0]

__________________________________________________________________________________________________

conv5_block8_1_conv (Conv2D) (None, 1, 1, 128) 77824 conv5_block8_0_relu[0][0]

__________________________________________________________________________________________________

conv5_block8_1_bn (BatchNormali (None, 1, 1, 128) 512 conv5_block8_1_conv[0][0]

__________________________________________________________________________________________________

conv5_block8_1_relu (Activation (None, 1, 1, 128) 0 conv5_block8_1_bn[0][0]

__________________________________________________________________________________________________

conv5_block8_2_conv (Conv2D) (None, 1, 1, 32) 36864 conv5_block8_1_relu[0][0]

__________________________________________________________________________________________________

conv5_block8_concat (Concatenat (None, 1, 1, 640) 0 conv5_block7_concat[0][0]

conv5_block8_2_conv[0][0]

__________________________________________________________________________________________________

bn (BatchNormalization) (None, 1, 1, 640) 2560 conv5_block8_concat[0][0]

__________________________________________________________________________________________________

avg_pool (GlobalAveragePooling2 (None, 640) 0 bn[0][0]

__________________________________________________________________________________________________

dense_1 (Dense) (None, 500) 320500 avg_pool[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 500) 0 dense_1[0][0]

__________________________________________________________________________________________________

dropout_1 (Dropout) (None, 500) 0 activation_1[0][0]

__________________________________________________________________________________________________

dense_2 (Dense) (None, 100) 50100 dropout_1[0][0]

==================================================================================================

Total params: 4,584,424

Trainable params: 4,536,360

Non-trainable params: 48,064

__________________________________________________________________________________________________
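The channel counts in the summary follow a simple rule, which a few lines of arithmetic can check. This is only a sketch: the growth rate of 32 and the halving in each transition layer are read off the shapes printed above (`*_2_conv` outputs 32 channels, `pool3_conv` goes from 512 to 256), and the helper names `after_dense_block` and `after_transition` are hypothetical, not part of Keras.

```python
GROWTH_RATE = 32   # channels each conv block appends (width of every *_2_conv output)
COMPRESSION = 0.5  # fraction of channels kept by a transition layer (assumed from the summary)


def after_dense_block(in_channels, num_layers, growth_rate=GROWTH_RATE):
    """Channels after a dense block: each layer concatenates growth_rate new maps."""
    return in_channels + num_layers * growth_rate


def after_transition(in_channels, compression=COMPRESSION):
    """Channels after the 1x1 convolution of a transition layer."""
    return int(in_channels * compression)


conv3_out = 512                                # conv3_block12_concat
pool3_out = after_transition(conv3_out)        # pool3_conv
conv4_out = after_dense_block(pool3_out, 16)   # conv4_block16_concat
pool4_out = after_transition(conv4_out)        # pool4_conv
conv5_out = after_dense_block(pool4_out, 8)    # conv5_block8_concat

print(pool3_out, conv4_out, pool4_out, conv5_out)  # 256 768 384 640
```

The last value, 640, is the width that `avg_pool` hands to the first dense layer.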

Recall that the ResNet architecture had roughly 25 million trainable parameters. In other words, we have increased the depth while cutting the number of trainable parameters to about 4.5 million.
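To see where those 4.5 million parameters come from, the per-layer counts in the summary can be reproduced by hand. The sketch below assumes what the printed numbers suggest: convolutions without bias, four parameters per channel in each batch normalization layer (two trainable, two moving statistics), and dense layers with bias. The function names are hypothetical helpers, not Keras API.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weights of a bias-free 2D convolution with a square kernel."""
    return kernel_size * kernel_size * in_channels * out_channels


def bn_params(channels):
    """gamma and beta (trainable) plus moving mean and variance (non-trainable)."""
    return 4 * channels


def dense_params(in_features, out_features):
    """Weight matrix plus one bias per output unit."""
    return in_features * out_features + out_features


# Values taken from rows of the summary above.
print(conv_params(1, 256, 128))   # conv4_block1_1_conv -> 32768
print(conv_params(3, 128, 32))    # conv4_block1_2_conv -> 36864
print(conv_params(1, 768, 384))   # pool4_conv          -> 294912
print(bn_params(640))             # bn                  -> 2560
print(dense_params(640, 500))     # dense_1             -> 320500
print(dense_params(500, 100))     # dense_2             -> 50100

# Trainable plus non-trainable parameters add up to the reported total.
print(4_536_360 + 48_064)         # 4584424
```

The 3x3 convolutions always cost 36,864 weights because they go from 128 to 32 channels; only the 1x1 bottleneck grows as more feature maps are concatenated, which keeps the total small despite the depth.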

With that said, let's train the model:

cdense = custom_dense_model.fit(x=x_train, y=y_train, batch_size=32, epochs=10, verbose=1, validation_data=(x_test, y_test), shuffle=True)

Train on 50000 samples, validate on 10000 samples

Epoch 1/10

50000/50000 [==============================] - 328s 7ms/step - loss: 4.2465 - acc: 0.0707 - mean_squared_error: 0.0098 - val_loss: 3.9126 - val_acc: 0.1075 - val_mean_squared_error: 0.0096

Epoch 2/10

50000/50000 [==============================] - 321s 6ms/step - loss: 3.7411 - acc: 0.1331 - mean_squared_error: 0.0095 - val_loss: 3.6431 - val_acc: 0.1434 - val_mean_squared_error: 0.0094

Epoch 3/10

50000/50000 [==============================] - 322s 6ms/step - loss: 3.4654 - acc: 0.1751 - mean_squared_error: 0.0092 - val_loss: 3.4077 - val_acc: 0.1869 - val_mean_squared_error: 0.0091

Epoch 4/10

50000/50000 [==============================] - 325s 7ms/step - loss: 3.2441 - acc: 0.2161 - mean_squared_error: 0.0090 - val_loss: 3.2245 - val_acc: 0.2252 - val_mean_squared_error: 0.0089

Epoch 5/10

50000/50000 [==============================] - 324s 6ms/step - loss: 3.0505 - acc: 0.2472 - mean_squared_error: 0.0087 - val_loss: 3.0746 - val_acc: 0.2480 - val_mean_squared_error: 0.0087

Epoch 6/10

50000/50000 [==============================] - 327s 7ms/step - loss: 2.8631 - acc: 0.2835 - mean_squared_error: 0.0084 - val_loss: 2.9652 - val_acc: 0.2710 - val_mean_squared_error: 0.0085

Epoch 7/10

50000/50000 [==============================] - 326s 7ms/step - loss: 2.7206 - acc: 0.3130 - mean_squared_error: 0.0082 - val_loss: 2.8479 - val_acc: 0.2935 - val_mean_squared_error: 0.0083

Epoch 8/10

50000/50000 [==============================] - 329s 7ms/step - loss: 2.5673 - acc: 0.3409 - mean_squared_error: 0.0079 - val_loss: 2.7761 - val_acc: 0.3077 - val_mean_squared_error: 0.0082

Epoch 9/10

50000/50000 [==============================] - 325s 7ms/step - loss: 2.4398 - acc: 0.3682 - mean_squared_error: 0.0077 - val_loss: 2.7609 - val_acc: 0.3194 - val_mean_squared_error: 0.0082

Epoch 10/10

50000/50000 [==============================] - 327s 7ms/step - loss: 2.3162 - acc: 0.3963 - mean_squared_error: 0.0074 - val_loss: 2.6835 - val_acc: 0.3373 - val_mean_squared_error: 0.0080

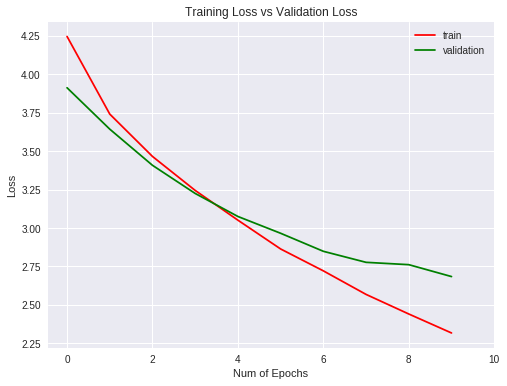

Let's plot the metrics obtained for training and validation:

plt.figure(0, figsize=(8, 6))  # size set at creation; changing rcParams afterwards does not resize an existing figure

plt.plot(cdense.history['acc'],'r')

plt.plot(cdense.history['val_acc'],'g')

plt.xticks(np.arange(0, 11, 2.0))

plt.xlabel("Num of Epochs")

plt.ylabel("Accuracy")

plt.title("Training Accuracy vs Validation Accuracy")

plt.legend(['train','validation'])

plt.figure(1, figsize=(8, 6))

plt.plot(cdense.history['loss'],'r')

plt.plot(cdense.history['val_loss'],'g')

plt.xticks(np.arange(0, 11, 2.0))

plt.xlabel("Num of Epochs")

plt.ylabel("Loss")

plt.title("Training Loss vs Validation Loss")

plt.legend(['train','validation'])

plt.show()

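Training progressed steadily: after 10 epochs the training accuracy reached 0.3963 and the validation accuracy 0.3373. The widening gap between the two curves shows that the model has not generalized well, but at first glance the results look better than those of the previous models. Let's look at this in more detail.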
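To put a number on the generalization gap, the accuracies from the training log can be compared epoch by epoch. The sketch below copies the values by hand from the log; in the notebook they would come from `cdense.history['acc']` and `cdense.history['val_acc']`. The helper names are hypothetical.

```python
# Accuracy values copied from the training log above (epochs 1 to 10).
train_acc = [0.0707, 0.1331, 0.1751, 0.2161, 0.2472,
             0.2835, 0.3130, 0.3409, 0.3682, 0.3963]
val_acc = [0.1075, 0.1434, 0.1869, 0.2252, 0.2480,
           0.2710, 0.2935, 0.3077, 0.3194, 0.3373]


def generalization_gaps(train, val):
    """Training accuracy minus validation accuracy, one value per epoch."""
    return [round(t - v, 4) for t, v in zip(train, val)]


def first_epoch_train_above_val(train, val):
    """First epoch (1-based) where training accuracy exceeds validation accuracy."""
    for epoch, (t, v) in enumerate(zip(train, val), start=1):
        if t > v:
            return epoch
    return None


gaps = generalization_gaps(train_acc, val_acc)
crossover = first_epoch_train_above_val(train_acc, val_acc)
print(gaps)
print(crossover)
```

A negative gap in the early epochs is common when dropout is active during training only; the point of interest is the epoch where the sign flips and the gap starts to grow.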

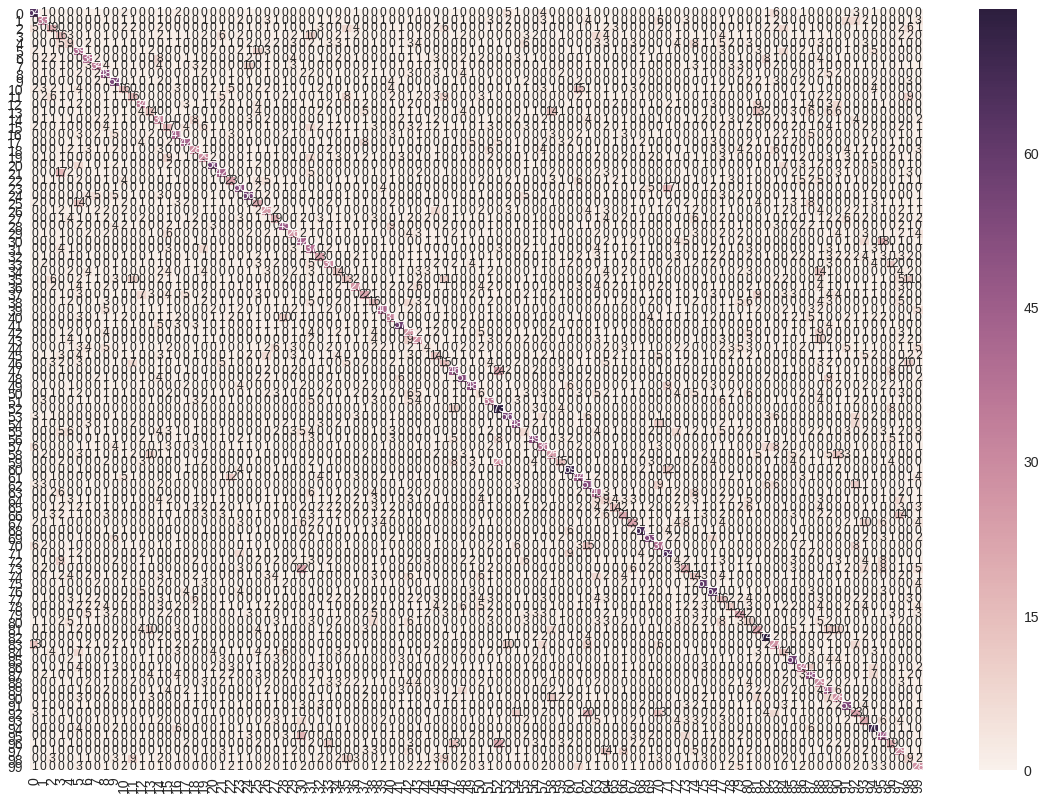

Confusion matrix

Let's now look at the confusion matrix and the precision, recall and F1-score metrics.

We run a prediction on the validation dataset and, from it, build the confusion matrix and display the metrics mentioned above:

cdense_pred = custom_dense_model.predict(x_test, batch_size=32, verbose=1)

cdense_predicted = np.argmax(cdense_pred, axis=1)

cdense_cm = confusion_matrix(np.argmax(y_test, axis=1), cdense_predicted)

# Visualize the confusion matrix

cdense_df_cm = pd.DataFrame(cdense_cm, range(100), range(100))

plt.figure(figsize = (20,14))

sn.set(font_scale=1.4) #for label size

sn.heatmap(cdense_df_cm, annot=True, annot_kws={"size": 12}) # font size

plt.show()
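The two `np.argmax` calls above turn one-hot labels and per-class scores into class indices before they are counted. The following standard-library sketch shows that step on toy data; the function names and the sample values are hypothetical and are not taken from the article's dataset.

```python
def argmax(values):
    """Index of the largest value (first one wins on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def confusion_matrix_from_scores(y_true_onehot, y_scores, n_classes):
    """cm[i][j] counts samples whose true class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for truth, scores in zip(y_true_onehot, y_scores):
        cm[argmax(truth)][argmax(scores)] += 1
    return cm


# Toy data: 4 samples, 3 classes.
y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
y_prob = [[0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.2, 0.5, 0.3], [0.3, 0.4, 0.3]]

cm = confusion_matrix_from_scores(y_true, y_prob, 3)
for row in cm:
    print(row)
```

Rows are true classes and columns are predicted classes, so correct predictions sit on the diagonal and the third sample (true class 2, predicted class 1) lands off it.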

Finally, we print the metrics:

cdense_report = classification_report(np.argmax(y_test, axis=1), cdense_predicted)

print(cdense_report)

precision recall f1-score support

0 0.55 0.64 0.59 100

1 0.41 0.33 0.36 100

2 0.29 0.19 0.23 100

3 0.15 0.16 0.16 100

4 0.12 0.09 0.10 100

5 0.25 0.39 0.31 100

6 0.39 0.38 0.38 100

7 0.46 0.34 0.39 100

8 0.37 0.48 0.42 100

9 0.44 0.54 0.48 100

10 0.25 0.16 0.19 100

11 0.24 0.16 0.19 100

12 0.39 0.34 0.36 100

13 0.23 0.14 0.17 100

14 0.24 0.30 0.27 100

15 0.20 0.17 0.18 100

16 0.43 0.41 0.42 100

17 0.52 0.42 0.46 100

18 0.30 0.28 0.29 100

19 0.30 0.29 0.30 100

20 0.63 0.56 0.59 100

21 0.40 0.42 0.41 100

22 0.32 0.23 0.27 100

23 0.50 0.50 0.50 100

24 0.50 0.56 0.53 100

25 0.21 0.20 0.20 100

26 0.24 0.25 0.25 100

27 0.24 0.19 0.21 100

28 0.45 0.45 0.45 100

29 0.30 0.26 0.28 100

30 0.31 0.42 0.36 100

31 0.25 0.37 0.30 100

32 0.27 0.23 0.25 100

33 0.32 0.30 0.31 100

34 0.17 0.14 0.15 100

35 0.19 0.13 0.15 100

36 0.35 0.27 0.31 100

37 0.26 0.22 0.24 100

38 0.18 0.16 0.17 100

39 0.54 0.40 0.46 100

40 0.32 0.31 0.31 100

41 0.58 0.57 0.57 100

42 0.19 0.25 0.22 100

43 0.28 0.27 0.27 100

44 0.09 0.04 0.06 100

45 0.16 0.14 0.15 100

46 0.19 0.15 0.17 100

47 0.42 0.46 0.44 100

48 0.45 0.51 0.48 100

49 0.54 0.48 0.51 100

50 0.08 0.06 0.07 100

51 0.30 0.33 0.32 100

52 0.43 0.73 0.54 100

53 0.60 0.56 0.58 100

54 0.49 0.48 0.49 100

55 0.09 0.07 0.08 100

56 0.52 0.49 0.51 100

57 0.32 0.36 0.34 100

58 0.29 0.25 0.27 100

59 0.28 0.15 0.20 100

60 0.64 0.69 0.67 100

61 0.40 0.44 0.42 100

62 0.37 0.51 0.43 100

63 0.31 0.40 0.35 100

64 0.09 0.09 0.09 100

65 0.27 0.14 0.18 100

66 0.25 0.21 0.23 100

67 0.33 0.23 0.27 100

68 0.58 0.67 0.62 100

69 0.51 0.53 0.52 100

70 0.34 0.37 0.35 100

71 0.52 0.64 0.58 100

72 0.05 0.04 0.05 100

73 0.27 0.21 0.24 100

74 0.14 0.14 0.14 100

75 0.50 0.61 0.55 100

76 0.54 0.62 0.58 100

77 0.14 0.16 0.15 100

78 0.18 0.11 0.14 100

79 0.29 0.24 0.26 100

80 0.09 0.10 0.09 100

81 0.24 0.22 0.23 100

82 0.57 0.74 0.64 100

83 0.26 0.27 0.26 100

84 0.17 0.14 0.15 100

85 0.43 0.57 0.49 100

86 0.37 0.34 0.35 100

87 0.37 0.48 0.42 100

88 0.18 0.29 0.23 100

89 0.26 0.41 0.32 100

90 0.23 0.28 0.25 100

91 0.43 0.53 0.48 100

92 0.22 0.23 0.22 100

93 0.17 0.21 0.19 100

94 0.49 0.70 0.57 100

95 0.35 0.44 0.39 100

96 0.25 0.19 0.22 100

97 0.21 0.29 0.25 100

98 0.10 0.09 0.10 100

99 0.25 0.28 0.26 100

avg / total 0.32 0.33 0.32 10000
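The F1-score column is the harmonic mean of the precision and recall columns, which can be checked against a few rows of the report. This is a small sketch with a hypothetical helper name; because the report rounds to two decimals, recomputing from the rounded values could differ by 0.01 on some rows.

```python
def f1_score_from(precision, recall):
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# class label -> (precision, recall, reported f1), copied from the report above.
rows = {0: (0.55, 0.64, 0.59), 52: (0.43, 0.73, 0.54), 82: (0.57, 0.74, 0.64)}

recomputed = {label: round(f1_score_from(p, r), 2) for label, (p, r, _) in rows.items()}
for label, (p, r, reported) in rows.items():
    print(label, recomputed[label], reported)
```

Since every class has a support of 100, a recall of 0.73 for class 52 means 73 of its 100 images were classified correctly, and the averaged recall of 0.33 is consistent with the final `val_acc` of 0.3373 in the training log.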

ROC curve (true positive and false positive rates)

Let's code the ROC curve:

from sklearn.datasets import make_classification

from sklearn.preprocessing import label_binarize

from numpy import interp  # scipy.interp is a deprecated alias of numpy.interp

from itertools import cycle

n_classes = 100

from sklearn.metrics import roc_curve, auc

# Plot linewidth.

lw = 2

# Compute ROC curve and ROC area for each class

fpr = dict()

tpr = dict()

roc_auc = dict()

for i in range(n_classes):

    fpr[i], tpr[i], _ = roc_curve(y_test[:, i], cdense_pred[:, i])

    roc_auc[i] = auc(fpr[i], tpr[i])

# Compute micro-average ROC curve and ROC area

fpr["micro"], tpr["micro"], _ = roc_curve(y_test.ravel(), cdense_pred.ravel())

roc_auc["micro"] = auc(fpr["micro"], tpr["micro"])

# Compute macro-average ROC curve and ROC area

# First aggregate all false positive rates

all_fpr = np.unique(np.concatenate([fpr[i] for i in range(n_classes)]))

# Then interpolate all ROC curves at these points

mean_tpr = np.zeros_like(all_fpr)

for i in range(n_classes):

    mean_tpr += interp(all_fpr, fpr[i], tpr[i])

# Finally average it and compute AUC

mean_tpr /= n_classes

fpr["macro"] = all_fpr

tpr["macro"] = mean_tpr

roc_auc["macro"] = auc(fpr["macro"], tpr["macro"])

# Plot all ROC curves

plt.figure(1)

plt.plot(fpr["micro"], tpr["micro"],

label='micro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["micro"]),

color='deeppink', linestyle=':', linewidth=4)

plt.plot(fpr["macro"], tpr["macro"],

label='macro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["macro"]),

color='navy', linestyle=':', linewidth=4)

colors = cycle(['aqua', 'darkorange', 'cornflowerblue'])

# Plot only the first three classes to keep the figure readable

for i, color in zip(range(3), colors):

    plt.plot(fpr[i], tpr[i], color=color, lw=lw,

             label='ROC curve of class {0} (area = {1:0.2f})'

             ''.format(i, roc_auc[i]))

plt.plot([0, 1], [0, 1], 'k--', lw=lw)

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Some extension of Receiver operating characteristic to multi-class')

plt.legend(loc="lower right")

plt.show()

# Zoom in view of the upper left corner.

plt.figure(2)

plt.xlim(0, 0.2)

plt.ylim(0.8, 1)

plt.plot(fpr["micro"], tpr["micro"],

label='micro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["micro"]),

color='deeppink', linestyle=':', linewidth=4)

plt.plot(fpr["macro"], tpr["macro"],

label='macro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["macro"]),

color='navy', linestyle=':', linewidth=4)

colors = cycle(['aqua', 'darkorange', 'cornflowerblue'])

for i, color in zip(range(3), colors):

    plt.plot(fpr[i], tpr[i], color=color, lw=lw,

             label='ROC curve of class {0} (area = {1:0.2f})'

             ''.format(i, roc_auc[i]))

plt.plot([0, 1], [0, 1], 'k--', lw=lw)

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Some extension of Receiver operating characteristic to multi-class')

plt.legend(loc="lower right")

plt.show()
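For readers who want to see what `roc_curve` and `auc` compute for a single class, here is a minimal standard-library sketch of the one-vs-rest procedure. The function names are hypothetical and this is not scikit-learn's implementation, which may also drop intermediate points and therefore return fewer of them.

```python
def roc_points(y_true, y_score):
    """Return (fpr, tpr) lists obtained by lowering the decision threshold step by step.

    Assumes y_true holds 0/1 labels and contains at least one of each.
    """
    positives = sum(y_true)
    negatives = len(y_true) - positives
    # Visit samples from the highest score to the lowest.
    ranked = sorted(zip(y_score, y_true), key=lambda pair: -pair[0])
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for index, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after all samples sharing this score are counted.
        last_of_tie = index + 1 == len(ranked) or ranked[index + 1][0] != score
        if last_of_tie:
            fpr.append(fp / negatives)
            tpr.append(tp / positives)
    return fpr, tpr


def auc_trapezoid(fpr, tpr):
    """Area under the curve using the trapezoidal rule."""
    area = 0.0
    for i in range(1, len(fpr)):
        area += (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
    return area


# Toy example: labels and scores for one class against the rest.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
fpr_toy, tpr_toy = roc_points(labels, scores)
area_toy = auc_trapezoid(fpr_toy, tpr_toy)
print(fpr_toy, tpr_toy, area_toy)
```

In the article's code the same computation runs once per class on the columns `y_test[:, i]` and `cdense_pred[:, i]`, while the micro-average flattens all columns into a single binary problem with `ravel()`.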

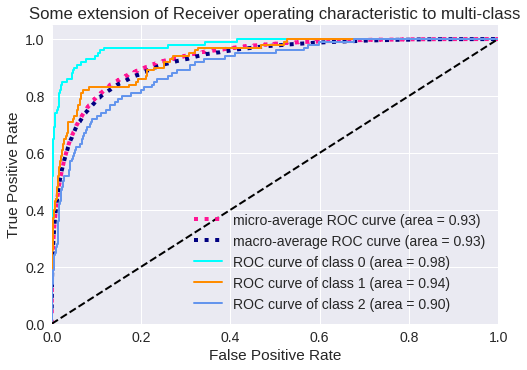

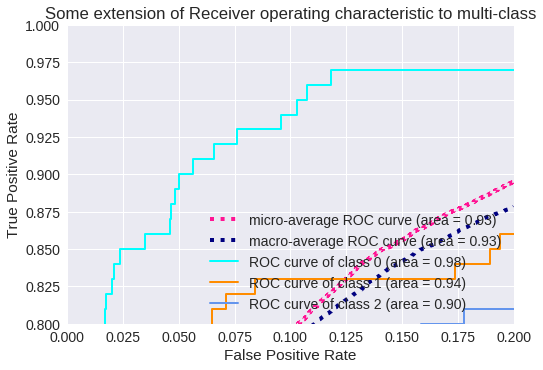

The result for three classes is shown in the following plots:

We will save the training history so that it can be compared with other models. We will also save the model together with its trained weights for future use:

# Model

custom_dense_model.save(path_base + '/cdense.h5')

# Training history

with open(path_base + '/cdense_history.txt', 'wb') as file_pi:

    pickle.dump(cdense.history, file_pi)
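The saved history can later be read back with `pickle.load`. The sketch below shows the round trip under stated assumptions: a small dictionary stands in for `cdense.history`, and a temporary directory replaces `path_base`, so none of the paths are the notebook's own.

```python
import os
import pickle
import tempfile

# Stand-in for cdense.history: metric name -> list of per-epoch values.
history = {'acc': [0.0707, 0.1331], 'val_acc': [0.1075, 0.1434]}

with tempfile.TemporaryDirectory() as tmp_dir:  # hypothetical replacement for path_base
    history_path = os.path.join(tmp_dir, 'cdense_history.txt')

    # Despite the .txt extension the file is binary, so it is opened in 'wb' / 'rb' mode.
    with open(history_path, 'wb') as file_pi:
        pickle.dump(history, file_pi)

    with open(history_path, 'rb') as file_pi:
        cdense_history = pickle.load(file_pi)

print(cdense_history['val_acc'])
```

A dictionary loaded this way can be indexed directly, as in `cdense_history['val_acc']`, without keeping the Keras `History` object around. Only unpickle files you created yourself, since `pickle.load` can execute arbitrary code.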

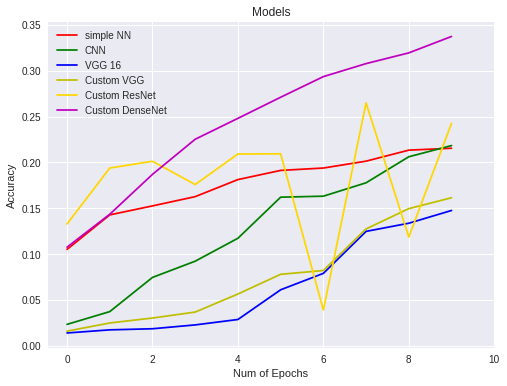

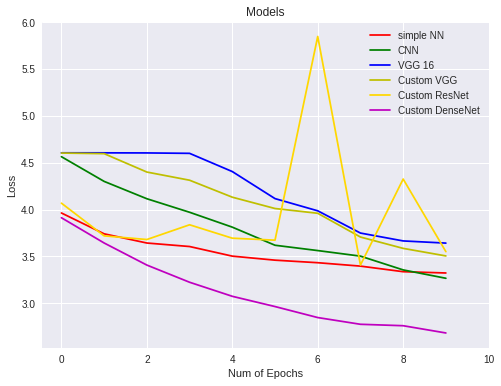

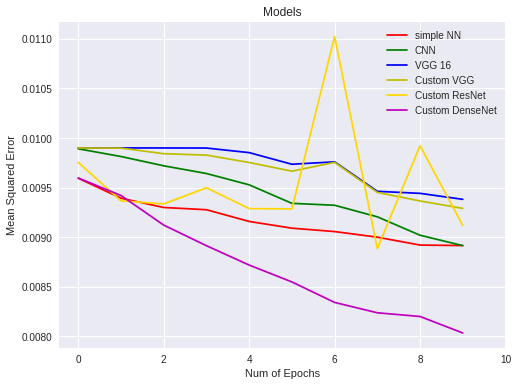

Next, let's compare the metrics with those of the previous models:

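plt.figure(0, figsize=(8, 6))  # size set at creation; the rcParams line below only affects figures created after it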

plt.plot(snn_history['val_acc'],'r')

plt.plot(scnn_history['val_acc'],'g')

plt.plot(vgg16_history['val_acc'],'b')

plt.plot(cvgg16_history['val_acc'],'y')

plt.plot(crn50_history['val_acc'],'gold')

plt.plot(cdense_history['val_acc'],'m')

plt.xticks(np.arange(0, 11, 2.0))

plt.rcParams['figure.figsize'] = (8, 6)

plt.xlabel("Num of Epochs")

plt.ylabel("Accuracy")

plt.title("Models")

plt.legend(['simple NN','CNN','VGG 16','Custom VGG','Custom ResNet', 'Custom DenseNet'])

plt.figure(1)

plt.plot(snn_history['val_loss'],'r')

plt.plot(scnn_history['val_loss'],'g')

plt.plot(vgg16_history['val_loss'],'b')